Not long ago, I was auditing a codebase for work (looking for bugs) when I realized that despite the quality of the code, I was becoming mentally fatigued extremely quickly and had a hard time working on it for long stretches of time…

I ended up figuring out what made this codebase so difficult to stick with, but it didn’t turn out to be what I expected (Cyclomatic Complexity). After doing a little reflection and research, it ended up being something more related to readability–something I didn’t have a lot of data on, but was curious to learn if there was objective terminology or common metrics.

So today we’re jumping into the results of that investigation, which means instead of visualizing code, we’re talking about visual patterns of code, specifically the ones that make my brain hurt!

Be warned! Tis a murky and ill-defined realm… some of the data points include popular metrics, academic papers, and practical opinions (including my own), but by the end of the search we’ll distill it down to 8 visually-observable properties that can help programmers of any language improve the readability of code.

Code readability metrics and alternate complexity metrics#

Let me be upfront: there is no commonly-used and accepted metric for code readability.

All I could find was 1) academic papers that didn’t seem to be used by the real world and 2) opinions… when I was looking for something more tangible.

Since I wasn’t looking to invent my own metric, my goal was to collect a set of visual patterns that anyone could use in a discussion about what makes code easier to read.

For example, take the examples below where the primary difference is just the shape or pattern of logic. Is any one of them the easiest to read? Or are some of them objectively worse to read?

The differences between some of the examples above are a matter of preference, but it is interesting to look for patterns that point out the “bad” ones… especially if those are easy to spot visually, with no special tools required!

My first thought for finding such patterns was to look at complexity metrics, but interestingly there’s not a huge number of well-known software complexity metrics or academic readability ideas that fit what I was looking for, specifically measures that:

- Work on source code snippets or a single function

- Don’t focus on “core complexity”: certain measurements like cyclomatic complexity are to some degree inseparable from the algorithm being implemented

- Don’t focus on superficial style factors: length of variable names, whitespace usage, choices of indentation or brackets/parens placement, etc.

But the good news was that there were two metrics that seem to be in use and did fit the criteria, so that’s where the journey begins.

Halstead Complexity Metrics#

In the late 70’s, a fellow named Maurice Halstead came up with a set of metrics in an attempt to build empirical measures of source code. This means they are translatable across languages and platforms, which is really nice for our purpose because they focus on how something is written rather than the complexity of underlying algorithms being described.

The root of his metrics was four measurements based around counting operators and operands:

- Number of distinct operators (n1)

- Number of distinct operands (n2)

- Number of total operators (N1)

- Number of total operands (N2)

Halstead’s focus was building a system of related metrics such as program “length”, “volume”, and “difficulty” with a series of equations describing the relationships between them… ambitiously culminating in a numerical value that would estimate the number of bugs contained in the implementation!

These metrics are definitely debatable (they were made in the 70’s…), but they give us numbers that are at least a starting point for comparison, and the reasoning seems to make sense.

Intuitively, the more operators in play, the more one has to reason about potential interactions. Similarly, a larger number of operands means understanding possibilities of dataflow become more complex.
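To make the relationships between the four counts concrete, here is a minimal sketch of the commonly cited textbook formulas for Halstead vocabulary, length, volume, difficulty, and effort. The helper name halsteadMetrics and the sample counts are made up for illustration, and since tools disagree on what counts as an operator or operand, the output should not be expected to match what any particular tool reports.

```javascript
// Sketch of the commonly cited textbook Halstead formulas.
// `halsteadMetrics` is a hypothetical helper name, not part of any tool.
function halsteadMetrics({ n1, n2, N1, N2 }) {
  const vocabulary = n1 + n2;                    // distinct operators + distinct operands
  const length = N1 + N2;                        // total operators + total operands
  const volume = length * Math.log2(vocabulary); // "size" of the implementation in bits
  const difficulty = (n1 / 2) * (N2 / n2);       // grows with operator variety and operand reuse
  const effort = difficulty * volume;
  return { vocabulary, length, volume, difficulty, effort };
}

// Made-up counts, chosen only so the arithmetic is easy to follow.
const sample = halsteadMetrics({ n1: 4, n2: 4, N1: 8, N2: 8 });
console.log(sample);
```

With these counts the vocabulary is 8 and the length is 16, so the volume works out to 16 × log2(8) = 48 and the difficulty to (4 / 2) × (8 / 4) = 4.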

Halstead Complexity Javascript Example#

If we go back to our early example we can make two variations that attempt to be on separate ends of the spectrum in terms of operands and operators, and we can see how that affects two of the Halstead metrics, Volume and Difficulty.

    function getOddnessA(n) {
      if (n % 2)
        return 'Odd';
      return 'Even';
    }
    // Halstead metrics from: https://ftaproject.dev/playground
    // operators: 4 unique, 7 total
    // operands: 5 unique, 6 total
    // "Volume" 33.30
    // "Difficulty" 2.50

    function getOddnessB(n) {
      const evenOdd = ['Odd', 'Even'];
      const index = Number(n % 2 === 0);
      return evenOdd[index];
    }
    // operators: 7 unique, 10 total
    // operands: 9 unique, 12 total
    // "Volume" 71.35
    // "Difficulty" 3.75

You can tell just by looking that the first one has fewer operators and operands and is a bit easier to read, and the Halstead Volume and Difficulty numbers confirm this. That’s kinda nice!

The big downside here is that it’s not well-defined what exactly counts as an operator or operand for all languages, so it’s best to just use a specific tool or implementation for measurement and stick with it. I used this site for the measurements reported above, whereas the picture below shows my estimation of trying to color code operators/operands to show visually that fewer colors is simpler:

Halstead Complexity Takeaways#

From a practical standpoint, these metrics suggest a couple of useful patterns to me:

- Smaller functions with fewer variables are generally easier to read, and redundant variables are only for when you hate the people that read your code

- Prefer to not use language-specific operators or syntactic sugars, since additional constructs are a tax on the reader. Try reading someone else’s Perl or Ruby if this doesn’t resonate with you.

- Chaining together map/reduce/filter and other functional programming constructs (lambdas, iterators, comprehensions) may be concise, but long/multiple chains hurt readability

  - This seems more common in JavaScript and Rust, or when a Python programmer gets fascinated with itertools

As mentioned, the Halstead metrics have been debated fiercely in academic and other circles, but we don’t need to wait for science.

Pfft, we can take the ideas and run!

“Cognitive Complexity”#

A more recent metric that tries to capture the difficulty of reading more accurately is “Cognitive Complexity”, developed by SonarSource, a company that makes static analysis products.

Some people find the name of the metric misleading, feeling that it makes the measure sound more scientific or objective, rather than naming it something that sounds like a heuristic developed from experience.

Being a practitioner who really only cares about effectiveness, I’ll take good metrics with bad names over bad metrics, and I have respect for heuristics as long as they work!

The authors break down Cognitive Complexity into three ideas:

- Shorthand constructs that combine statements decrease difficulty

- Each break from linear flow increases difficulty

- Nested control flow increases difficulty

Seems straightforward and reasonable, but the whitepaper goes into much more depth than just three points, so let’s get into the meat of this to see what a group of static analysis developers believes hurts readability.

Shorthand Constructs#

For the first principle, their whitepaper uses the following two snippets, arguing that the first increases difficulty for the reader while the second doesn’t:

    // 1
    MyObj myObj = null;
    if (a != null) {
      myObj = a.myObj;
    }

    // 2
    MyObj myObj = a?.myObj;

The second is shorter and takes less time to read, but I’d argue that in the second construct there’s a better chance that the programmer forgets to properly handle all of the possibilities… case in point, these code snippets aren’t actually equivalent!

Read as JavaScript or TypeScript, myObj in the first case will be either a.myObj or null, while in the second it will be either a.myObj or undefined!

This may seem like splitting hairs, but if I’ve got my bug-hunting hat on, I look for the small unexpected behaviors like this to see if I can leverage them into something useful!

Even languages with solid type-checking like TypeScript or Rust only reduce the chance that you omit handling such a case; they don’t guarantee that you handle it correctly in all cases.

Without the assistance of type checking, like in vanilla JavaScript, the chances of that corner case not being handled are much higher. So while I won’t dispute that shorthand constructs are simpler to write and easier to read, there’s still some tradeoff in terms of conciseness and density.
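The null versus undefined difference is easy to check in JavaScript. Below is a small sketch that wraps both forms in functions; the names longhand, shorthand, and shorthandWithDefault are invented here for illustration and don’t come from the whitepaper.

```javascript
// Longhand form: the result is null whenever `a` is null or undefined.
function longhand(a) {
  let myObj = null;
  if (a != null) {
    myObj = a.myObj;
  }
  return myObj;
}

// Shorthand form: optional chaining yields undefined when `a` is null or undefined.
function shorthand(a) {
  return a?.myObj;
}

// Adding `?? null` matches longhand for a missing `a`, but it still differs
// when `a` exists and `a.myObj` itself is undefined (longhand keeps undefined).
function shorthandWithDefault(a) {
  return a?.myObj ?? null;
}

console.log(longhand(null));             // null
console.log(shorthand(null));            // undefined
console.log(shorthandWithDefault(null)); // null
console.log(longhand({}));               // undefined
console.log(shorthandWithDefault({}));   // null
```

Even the “fixed” shorthand has its own corner case, which is the kind of density tradeoff described above.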

Linear Flow Breaks#

One thing that seems obvious is that “linear” code with no conditionals is easier to scan than code with conditionals, and this is a big focus for Cognitive Complexity.

Naturally they assess additional difficulty for any conditionals, loops, or gotos, but they also get a bit fancier and include conditional macros, try/catch blocks, sequences of logical operators, and recursion.

I totally agree that each of these things generally affects readability, but I think there are four fine points worth talking about.

Point 1. They count switch statements as a single group, but they penalize each additional else-if in a chain because each else-if can make more than one comparison, even if the one in question doesn’t.

Don’t get me wrong, I think switches look better than if-else chains, but they aren’t innocent either; switch case fall-throughs definitely increase reading difficulty and missing breaks have caused needless headaches for many programmers, myself included!

    // Switches are preferred by this metric
    function getSign1(n) {
      switch (Math.sign(n)) {
        case -1:
          return 'negative';
        case 1:
          return 'positive';
        default:
          return 'other';
      }
    }

    // It's true that else-if doesn't look as nice when each case returns
    function getSign2(n) {
      if (Math.sign(n) === -1) {
        return 'negative';
      } else if (Math.sign(n) === 1) {
        return 'positive';
      } else {
        return 'other';
      }
    }

    // but if the switch uses "break", the advantage decreases...
    function getSign3(n) {
      let sign = '';
      switch (Math.sign(n)) {
        case -1:
          sign = 'negative';
          break;
        case 1:
          sign = 'positive';
          break;
        default:
          sign = 'other';
      }
      return sign;
    }
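To show why fall-throughs are not innocent, here is a contrived variant of the break-based switch with a single break left out. The name getSignBuggy is invented for this sketch.

```javascript
// Contrived example: one missing `break` silently changes the result.
function getSignBuggy(n) {
  let sign = '';
  switch (Math.sign(n)) {
    case -1:
      sign = 'negative';
      // no break here, so execution falls through into the next case
    case 1:
      sign = 'positive';
      break;
    default:
      sign = 'other';
  }
  return sign;
}

console.log(getSignBuggy(-5)); // 'positive'
console.log(getSignBuggy(5));  // 'positive'
console.log(getSignBuggy(0));  // 'other'
```

Nothing about the shape of the function hints at the problem; the reader has to check every case for its break.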

Point 2. They count sequences of logical operators in a conditional, meaning that a chain of any length that uses only && or only || counts as one, but mixing them counts as increased difficulty.

This actually feels pretty right on, and I think this addresses how some conditionals are more difficult to read without being much longer:

    // this conditional only adds 1 point
    if (debug || verbose || consoleMode) { ... }

    // this conditional adds 2, and not because of the indent
    if (debug ||
        (verbose && consoleMode)) { ... }

    // this conditional adds 3:
    if (debug ||
        !(verbose && consoleMode)) { ... }

Point 3. Exception handling–something I think is commonly overlooked but can really be hard on a reader if not handled well.

In Cognitive Complexity, try/catch blocks increase the difficulty score, but multiple catch blocks aren’t considered harder than just one, and try and finally blocks are ignored.

I don’t want to get caught up on every point, but one important thing I think this leaves out is the readability cost of throwing exceptions. When exception handling crosses function boundaries, it essentially interleaves the complexity of the involved functions.

    function divideBy7(n) {
      if (n <= 0) {
        // aggressive exception throwing hurts readability because
        // now the reader has to search and find where this might be caught
        throw new Error(`divideBy7 expects positive numbers, got ${n}`);
      }
      // integer division, rounding down
      return Math.floor(n / 7);
    }
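As a rough illustration of how a throw interleaves functions, the sketch below puts divideBy7 at the bottom of a small call chain. The callers weeksFromDays and describeDuration are hypothetical names invented for this example.

```javascript
function divideBy7(n) {
  if (n <= 0) {
    throw new Error(`divideBy7 expects positive numbers, got ${n}`);
  }
  return Math.floor(n / 7);
}

// Hypothetical middle layer: no try/catch, so the exception passes straight through.
function weeksFromDays(days) {
  return divideBy7(days);
}

// Hypothetical top layer: the reader only learns what happens to bad input
// after tracing the call chain all the way up to this handler.
function describeDuration(days) {
  try {
    return `${weeksFromDays(days)} full weeks`;
  } catch (err) {
    return `invalid duration: ${err.message}`;
  }
}

console.log(describeDuration(15)); // '2 full weeks'
console.log(describeDuration(-3)); // 'invalid duration: divideBy7 expects positive numbers, got -3'
```

To understand what the throw in divideBy7 really does, a reader has to hold all three functions in mind at once.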

Point 4. This metric counts any goto (aka “jump-to-label”) as always increasing difficulty; seems reasonable, right?

But there is one particular goto structure (usually “goto out” or “goto done”, etc) that some experts seem to agree can be helpful for concisely dealing with cleanup handling on error conditions.

Conversely, if a goto crosses a loop boundary in an unexpected way (e.g. something not achievable with a plain continue or break), it forces the reader to re-create and understand this new and unexpected control flow… and that is always taxing to read, even if the structure and intent are logical.

My opinion is that a goto can be as harmless as any other conditional in some cases, but in other cases it is much worse than adding an extra if statement.
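JavaScript has no goto, but a labeled break is its closest relative and gives a feel for a jump that crosses a loop boundary. This is only an analogy under that assumption, not the cleanup pattern mentioned above, and the function name findCell is invented for the sketch.

```javascript
// Labeled break: a structured jump out of both loops at once.
function findCell(matrix, target) {
  let found = null;
  search:
  for (let row = 0; row < matrix.length; row++) {
    for (let col = 0; col < matrix[row].length; col++) {
      if (matrix[row][col] === target) {
        found = [row, col];
        break search; // leaves the inner AND the outer loop
      }
    }
  }
  return found;
}

console.log(findCell([[1, 2], [3, 4]], 3)); // [ 1, 0 ]
console.log(findCell([[1, 2], [3, 4]], 9)); // null
```

This jump is fairly easy to follow because the label sits directly on the loop being exited; the further a label is from its jump, the more control flow the reader has to reconstruct.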

Nested Conditionals/Loops#

Since conditionals are harder to read, nested conditionals are worse, right?

The authors of this metric definitely believe so, doubling down and adding an additional point of difficulty for each level of nesting (in addition to points for conditionals, loops, etc), as they show with a Java example:

    void myMethod () {
      try {
        if (condition1) { // +1
          for (int i = 0; i < 10; i++) { // +2 (nesting=1)
            while (condition2) { … } // +3 (nesting=2)
          }
        }
      } catch (ExcepType1 | ExcepType2 e) { // +1
        if (condition2) { … } // +2 (nesting=1)
      }
    }
    // Cognitive complexity 9

This is actually a common idea, one I agree with, and I’ve heard other practitioners call it things like the “Level of Indentation” or “Bumpy Road” metric. Hard to disagree with the premise that nested conditional logic is harder to read, especially if the level of nesting goes beyond 2:

    function logIntegerDivA(x: number, y: number) {
      if (debug) {
        if (x != 0) {
          if (y != 0) {
            console.log(Math.floor(x/y));
          }
        }
      }
    }

    // vs

    function logIntegerDivB(x: number, y: number) {
      // guard clauses: bail out early instead of nesting
      if (!debug) {
        return;
      }
      if ((x == 0) || (y == 0)) {
        return;
      }
      console.log(Math.floor(x/y));
    }

We can talk about how many points different constructs warrant, but our goal is to improve code, not come up with our own metric so we can sell a static analysis tool.

To wrap this part up, we see that Cognitive Complexity raises a lot of great discussion points on what affects readability and could be an effective heuristic, but it has its flaws.

For example, one interesting omission is that it doesn’t directly account for the length of a function, even though, all else being equal, long functions generally take more effort to read than short ones.

And since the company that invented it doesn’t advertise an open-source reference implementation for common languages, it’s best to take the ideas that we find helpful and continue on.

Function shape, patterns, and variables#

A common theme when talking about visual patterns of code is to reference the “shape” of a function (referring to more than just indentation) and how it uses variables.

While there are a number of academic papers around variable use and understanding, “shape” and “pattern” aren’t well-defined terms, so I couldn’t find any that effectively captured more than one piece of what I find to be three important factors for readability:

- Good names are vitally important, and variable shadowing is terrible

- Prefer shorter variable liveness durations

- Familiar variable usage patterns are easier to understand than novel ones

Distinct and Descriptive Names#

I hesitate to include the first one, because it’s obvious that descriptive names help someone understand what code is supposed to do, and duplicative or cryptic names do the opposite.

But there are actually two facets of this idea that I feel are more related to “shape” or usage than meets the eye:

- Variable shadowing is dangerous; any place where the reader has to think about scope rules in order to deconflict which version of a variable is being used should be changed

- Prefer visually distinct names (e.g. have you ever mistaken i for j or item for items?)

  - I once audited a codebase where one module’s author had used three variations of the same variable name in a single function (e.g. node, _node, and thisNode)… unsurprisingly this component was rife with bugs that had security impacts on the larger system
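As a small illustration of why shadowing is dangerous, here is a contrived sketch where an inner declaration hides the running total. Both function names are made up for this example.

```javascript
// Contrived bug: the inner `total` shadows the outer one, so capped values are lost.
function sumCappedShadowed(values, cap) {
  let total = 0;
  for (const value of values) {
    if (value > cap) {
      let total = 0; // a NEW block-scoped variable with the same name
      total += cap;  // updates the inner variable only
    } else {
      total += value; // updates the outer variable
    }
  }
  return total;
}

// Same intent with distinct names: there is only one thing called a total.
function sumCapped(values, cap) {
  let runningTotal = 0;
  for (const value of values) {
    const cappedValue = Math.min(value, cap);
    runningTotal += cappedValue;
  }
  return runningTotal;
}

console.log(sumCappedShadowed([1, 10, 2], 5)); // 3
console.log(sumCapped([1, 10, 2], 5));         // 8
```

The shadowed version runs without any error, which is exactly why the reader has to reason about scope rules to spot it.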

Shorter-lived Variables#

The second idea refers to “live variable analysis”, which looks at the span between where a variable is first written and the last place it could possibly be used. This topic seems to be reserved for compiler classes, but it’s an intuitive thing: long liveness durations force the reader to keep more variables and their possible values in their head.

    // this version declares variables at the top
    function fibonacciA(n: number): number {
      let fibN = n;
      let fibNMinus1 = 1;
      let fibNMinus2 = 0;

      // live: n, fibN, fibNMinus1, fibNMinus2
      if (n === 0) {
        return 0;
      } else if (n === 1) {
        return 1;
      }

      // live: n, fibN, fibNMinus1, fibNMinus2
      for (let i = 2; i <= n; i++) {
        fibN = fibNMinus1 + fibNMinus2;
        fibNMinus2 = fibNMinus1;
        fibNMinus1 = fibN;
      }

      // live: fibN
      return fibN;
    }

    // this reduces the live range of the three local variables
    function fibonacciB(n: number): number {
      // live: n
      if (n === 0) {
        return 0;
      } else if (n === 1) {
        return 1;
      }

      // these three only become live right before their use
      let fibN = n;
      let fibNMinus1 = 1;
      let fibNMinus2 = 0;

      for (let i = 2; i <= n; i++) {
        fibN = fibNMinus1 + fibNMinus2;
        fibNMinus2 = fibNMinus1;
        fibNMinus1 = fibN;
      }

      return fibN;
    }

For this, don’t be concerned about the exact definition, because while it’s partly about the difficulty of mental dataflow, it’s also about locality and how far away the various uses of a variable are from each other.

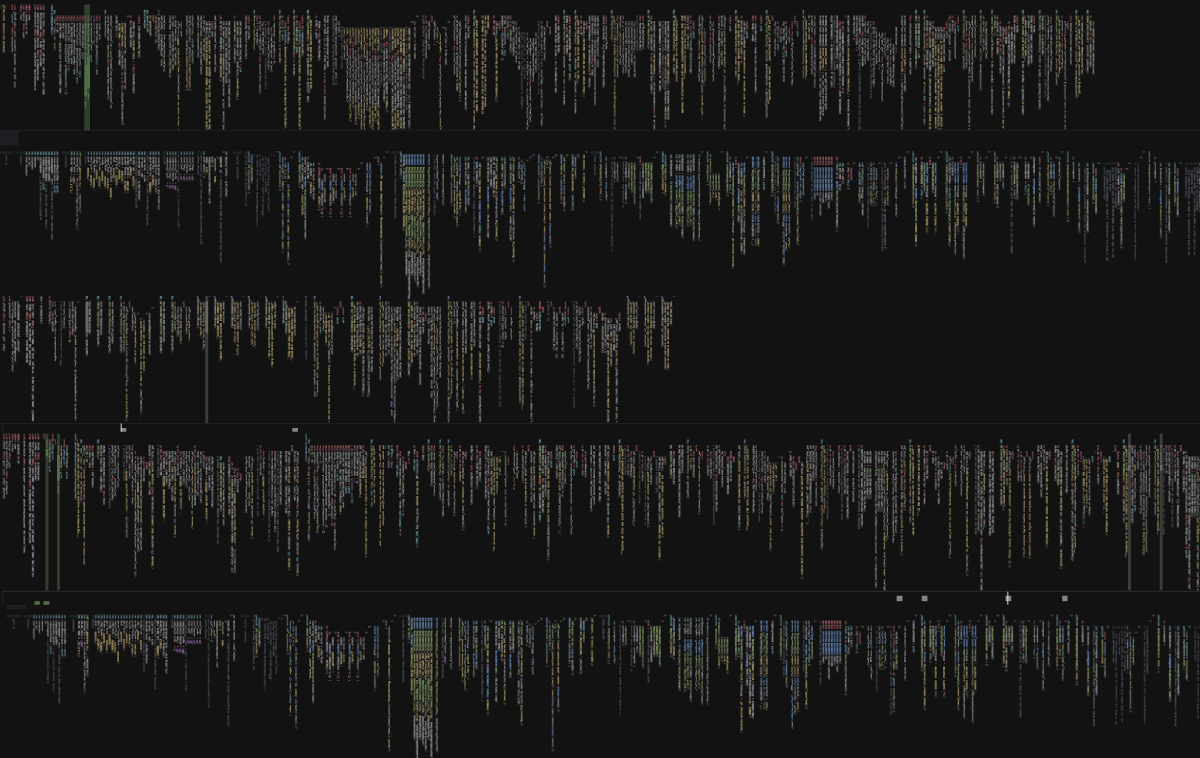

The picture below shows a span of uses which approximates the liveness analysis and shows the difference even for a fairly small and contrived example.

The worst case is a variable whose liveness spans multiple functions and is written in multiple places… those are tough situations to manage without error, so carefully consider whether there’s a better way.

Often an object may be more appropriate than passing long-lived variables between functions, especially with multiple variables whose liveness durations are basically the same. If an object doesn’t make sense, minimize the number of functions and lines a reader has to read in order to understand what the value could be.

Functional programming paradigms often push programmers towards keeping variable liveness short, even to the point of skipping the use of variables altogether. While there is a lot of elegant code that shows this working, you can definitely take things too far in this direction…

For long function chains or callbacks that stack up, breaking up the chain into smaller groups and using a well-named variable or helper function can go a long way in reducing the cognitive load for readers.

    // which is easier and faster to read?
    // (name is passed in explicitly rather than read from an outer scope)
    function funcA(graph, name) {
      return graph.nodes(`node[name = ${name}]`)
        .connected()
        .nodes()
        .not('.hidden')
        .data('name');
    }

    // or:
    function funcB(graph, name) {
      const targetNode = graph.nodes(`node[name = ${name}]`);
      const neighborNodes = targetNode.connected().nodes();
      const visibleNames = neighborNodes.not('.hidden').data('name');
      return visibleNames;
    }

Is the second one marginally less efficient?

Yes.

Does it actually matter? No. Unless you’ve run a performance tool that tells you those specific lines are the problem.

As others have written, computers are fast and premature optimization is a bad thing.

Reuse of Familiar Code Patterns#

The third and final idea on variables is on reusing familiar code/variable shapes: basically suggesting you leverage familiarity and follow the Principle of Least Surprise when writing code.

Reusing code patterns wherever possible (without copy-pasting) helps because readers don’t have to think as hard about patterns they recognize, and you can call out departures from otherwise familiar structures with edifying variable names or comments.

A common example would be to stay consistent in your choice of how to write conditionals (e.g. pick one of the 6 getOddness shape variations from the top of this post and only use that shape in a particular codebase).

Taking this idea to its logical conclusion is basically just encouraging the use of templated or generic functions so that a reader doesn’t have to recognize that a pattern is being repeated across functions.
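As a sketch of that logical conclusion, the example below replaces a hand-rolled “find the best item” loop with one generic helper. The names oldestUserByHand, maxBy, and the sample data are all invented for illustration.

```javascript
// Hand-rolled version: the reader has to recognize the "keep the best so far" shape.
function oldestUserByHand(users) {
  let best = users[0];
  for (const user of users) {
    if (user.age > best.age) {
      best = user;
    }
  }
  return best;
}

// Generic version: the shape lives in one place and gets a name.
// Returns undefined for an empty array.
function maxBy(items, score) {
  let best = items[0];
  for (const item of items) {
    if (score(item) > score(best)) {
      best = item;
    }
  }
  return best;
}

const users = [
  { name: 'Al', age: 40 },
  { name: 'Barbara', age: 25 },
];

console.log(oldestUserByHand(users).name);                 // 'Al'
console.log(maxBy(users, (u) => u.age).name);              // 'Al'
console.log(maxBy(users, (u) => u.name.length).name);      // 'Barbara'
```

Once a reader has seen maxBy, each call site only asks them to understand the scoring function rather than the whole loop.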

8 Patterns for Improving Code Readability#

Combining the points discussed so far and merging/removing duplicates where possible, we end up with a list of 8 factors:

1. Line/Operator/Operand count: Make smaller functions with fewer variables and operators; they are easier to read

2. Novelty: Avoid novelty in function shapes, operators, or syntactic sugars; prefer reusing common patterns in a codebase

3. Grouping: Split series of long function chains, iterators, or comprehensions into logical groupings via helper functions or intermediate variables

4. Conditional simplicity: Keep conditional tests as short as possible and prefer sequences of the same logical operator over mixing operators within a conditional

5. Gotos: Never use gotos unless you are following this one pattern, and even then only when the alternatives are worse

6. Nesting: Minimize nested logic (aka avoid large variations in indentation). If deep nesting is required, isolate it in a function instead of deeply nesting inside a larger function

7. Variable distinction: Always use descriptive and visually distinct variable names; avoid variable shadowing

8. Variable liveness: Prefer shorter liveness durations for variables, especially with regard to function boundaries

Each of these applies across languages and code format/styles, and can give objective measures to use in discussions about readability.

Conclusion#

We’ve covered a lot of ideas from a wide variety of sources, but in the end we collected a set of visually observable patterns that can help determine why certain code is harder to read.

To bring closure to the story at the beginning of this post, the codebase that was breaking my brain had several anti-patterns, specifically related to items 1, 2, 3, 6, and 8 in the list above.

It had long functions, used a wide mix of language constructs, and contained many function chains which should’ve been put in helper functions. As a side effect, the code’s many large functions ended up with a lot of nested complexity and long-lived variables too.

Despite the high quality of the code and the authors, we found more than one critical bug, including one that was pretty easy to see… but it had been missed, in my opinion because it was in the middle of a long and complex function that was difficult to reason about.

Since all of the patterns in this post focused on snippets and single functions, maybe in the future we’ll talk about interprocedural issues. If you want to hear more about complexity issues, find me on your social of choice and let me know, or reshare this post!

Til then, I’m just going to end with what a mentor once told me early in my career: “the person who is most likely to read your code a month from now is you.”

Selected References#

- Comparing Halstead metrics of the same algorithm in Python, C++, JavaScript and Java

- Typescript Playground for Halstead Metrics and Cyclomatic Complexity

- Radon: a Python package for complexity metrics

- Cognitive complexity whitepaper

- The Zen of Python

- Carnegie Mellon Software Engineering Institute: gotos are ok in this one case